Have you ever joined a project without test coverage and wanted to add a new feature or fix a bug? You spent some time on it, committed the fix, and deployed. Amazing! Five minutes later, bam, ten more bugs! If you've experienced that, you're not alone! In this session, I will explain various types of testing, both for your application layer and your infrastructure, and how they can help your technical staff understand what you have and reduce the risk of change. Then, we will dive into writing fast, isolated, automated integration tests with the help of containers, without the need to spin up a long-lived shared test environment.

Event details can be found on the 3C's Meetup page.

Note: The recording and the presentation (PDF) are available at the end of this article. 👇

Article

Today, I'm going to tell you a story to explain why we need tests. Hopefully, by the end of it, the path ahead of you will be a little clearer. After that, we will "write" our first test, and then I will share different types of tests and some common problems in this area. Finally, we will see if containers can help!

- Why Test

Let's begin with a story about a hypothetical company named "AmazingLargeCorporate.com," where Lisa is an engineering manager. A while ago, she hired Peter as a programmer. A few days after Peter joined the company, he received his first task: to give all customers a 10% discount!

Like many of us, he was curious, so he dived into the code. After a bit, he found this Order class and its "calculateTotalPrice" method. It simply goes through all the items in the basket, gets each item's price, multiplies it by that item's quantity, and sums the results to get the total price. Then he says, "Maybe that's where I should add the discount!"

So, he directly adds the "discount logic" there, commits the code, pushes his changes to the repository, and, with their existing simple CI/CD pipeline, this new change goes directly to production!
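
To make the problem concrete, here is a minimal Go sketch of Peter's change. All names (`Item`, `Order`, `CalculateTotalPrice`, `InvoiceTax`, `totalStillPinned`) are hypothetical stand-ins for the classes in the story, and prices are integer cents. The point is that one shared method now silently returns a different value to every caller, and a simple check that pinned the old total would have failed right away.

```go
package main

// Item is a hypothetical line item.
type Item struct {
	Name     string
	Price    int64 // unit price in cents
	Quantity int64
}

// Order is a hypothetical basket of items.
type Order struct {
	Items []Item
}

// CalculateTotalPrice sums price x quantity over all items.
// Peter's change folds the 10% discount into this shared method,
// so every caller now receives the discounted amount.
func (o Order) CalculateTotalPrice() int64 {
	var total int64
	for _, it := range o.Items {
		total += it.Price * it.Quantity
	}
	return total * 90 / 100 // the new "discount logic"
}

// InvoiceTax is a hypothetical caller written when CalculateTotalPrice
// still returned the undiscounted total; its result silently changes too.
func InvoiceTax(o Order, ratePercent int64) int64 {
	return o.CalculateTotalPrice() * ratePercent / 100
}

// totalStillPinned is a characterization check written before the change:
// it pins today's behaviour, 2 x 1000 + 1 x 500 = 2500 cents.
func totalStillPinned() bool {
	o := Order{Items: []Item{{"book", 1000, 2}, {"pen", 500, 1}}}
	return o.CalculateTotalPrice() == 2500
}
```

With the discount folded in, `totalStillPinned` returns false (2250 instead of 2500), which is exactly the kind of early warning that would have sent Peter looking for every other caller of the method.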

He is happy! His manager is happy, and everyone is happy!

A few days later, Lisa shows up in front of his desk and says, "Tax calculations are incorrect! Finance asked me why suddenly we are calculating gross revenue based on the discounted amount instead of the original value!?! By the way, popular items should never be discounted! Now, we also want to give different types of discounts!"

Peter looks into the code again and finds this "Item class". Of course, naming is difficult! 🙃

Then he finds the getter method for the price and thinks, "Maybe this is where I should do something! Or, I don't know, maybe I should add a global configuration or something. Uh, I remember from school that we had these 'design patterns.' Can I use any of them to give different types of discounts? Maybe the strategy pattern? Who knows!"

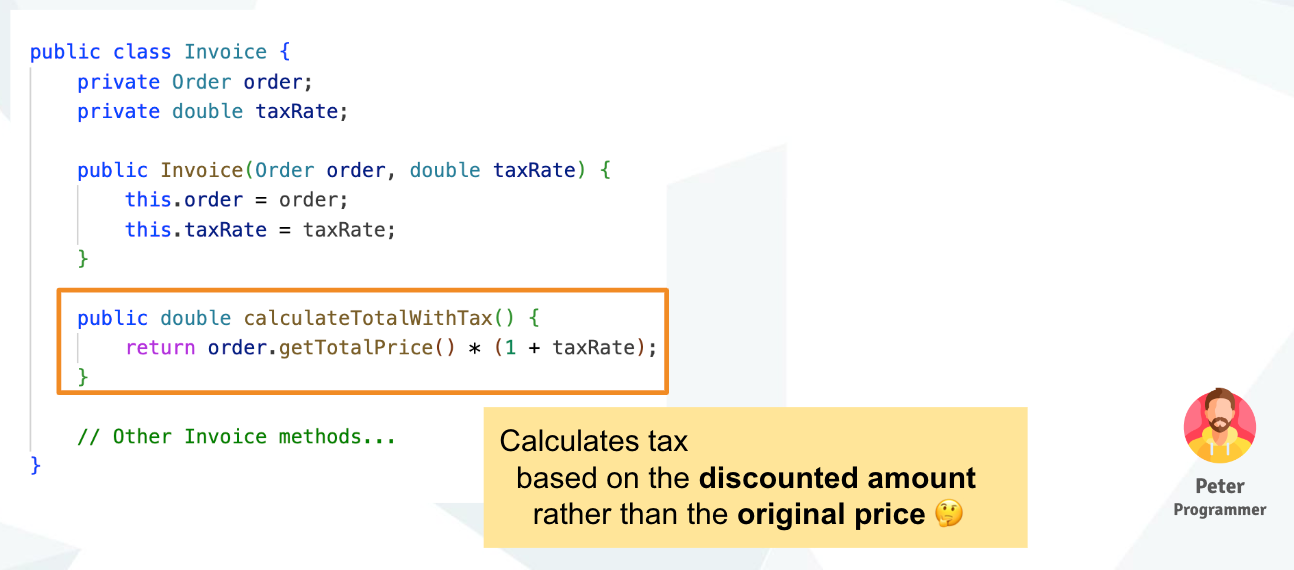

He looks further into the code and finds the "Invoice class." There, he finds a method to calculate tax. It uses the same method, so the tax is now calculated on the discounted amount!

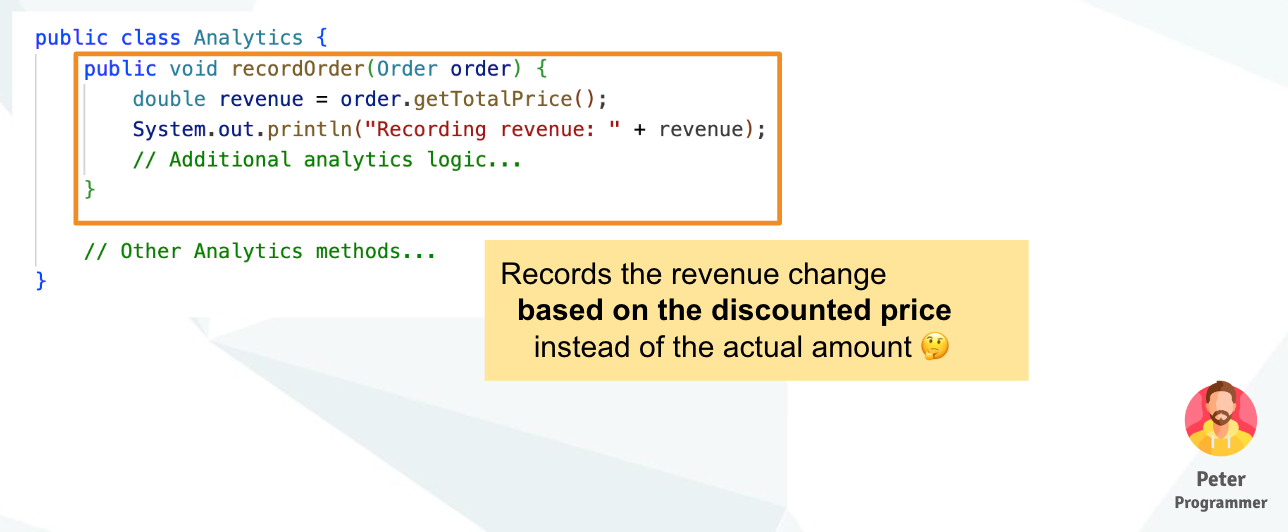

He looks further, finds this "Analytics class," and then finds its "recordOrder" method. As you can see, it calculates the revenue difference using the exact same method that Peter himself changed to return the discounted amount!

He thinks out loud again: "Maybe I should revert my previous change and create another method instead."

But still... How can I give different types of discounts!? He starts coding again.
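
One possible way out, sketched in Go with hypothetical types that only loosely mirror the story: keep the gross total untouched, because tax and analytics rely on it, and move each discount rule into its own type behind an interface. That is the strategy pattern Peter half-remembered. Popular items are skipped, as Lisa asked. This is an illustration, not the story's real code.

```go
package main

// Item is a hypothetical line item; prices are integer cents.
type Item struct {
	Name     string
	Price    int64
	Quantity int64
	Popular  bool // popular items are never discounted
}

// DiscountStrategy is one interchangeable discount rule.
type DiscountStrategy interface {
	Discount(it Item) int64 // discount in cents for the whole line
}

// PercentOff takes a percentage off the line total.
type PercentOff struct{ Percent int64 }

func (p PercentOff) Discount(it Item) int64 {
	return it.Price * it.Quantity * p.Percent / 100
}

// FixedOff takes a fixed amount off each unit.
type FixedOff struct{ Cents int64 }

func (f FixedOff) Discount(it Item) int64 {
	perUnit := f.Cents
	if perUnit > it.Price {
		perUnit = it.Price // never discount a unit below zero
	}
	return perUnit * it.Quantity
}

// Order keeps the gross total that Invoice and Analytics rely on
// and exposes the discounted total through a separate method.
type Order struct{ Items []Item }

// GrossTotal is the undiscounted sum of price x quantity.
func (o Order) GrossTotal() int64 {
	var total int64
	for _, it := range o.Items {
		total += it.Price * it.Quantity
	}
	return total
}

// NetTotal applies the chosen strategy to every non-popular item.
func (o Order) NetTotal(s DiscountStrategy) int64 {
	total := o.GrossTotal()
	for _, it := range o.Items {
		if !it.Popular {
			total -= s.Discount(it)
		}
	}
	return total
}
```

Adding a new kind of discount now means adding a new type, without touching `GrossTotal` or the code that calls it.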

Pushing to production... Finding another error. Again, fixing it!

Finding another error! Fixing it! Pushing it to production...

If this sounds familiar to you, you're not alone!

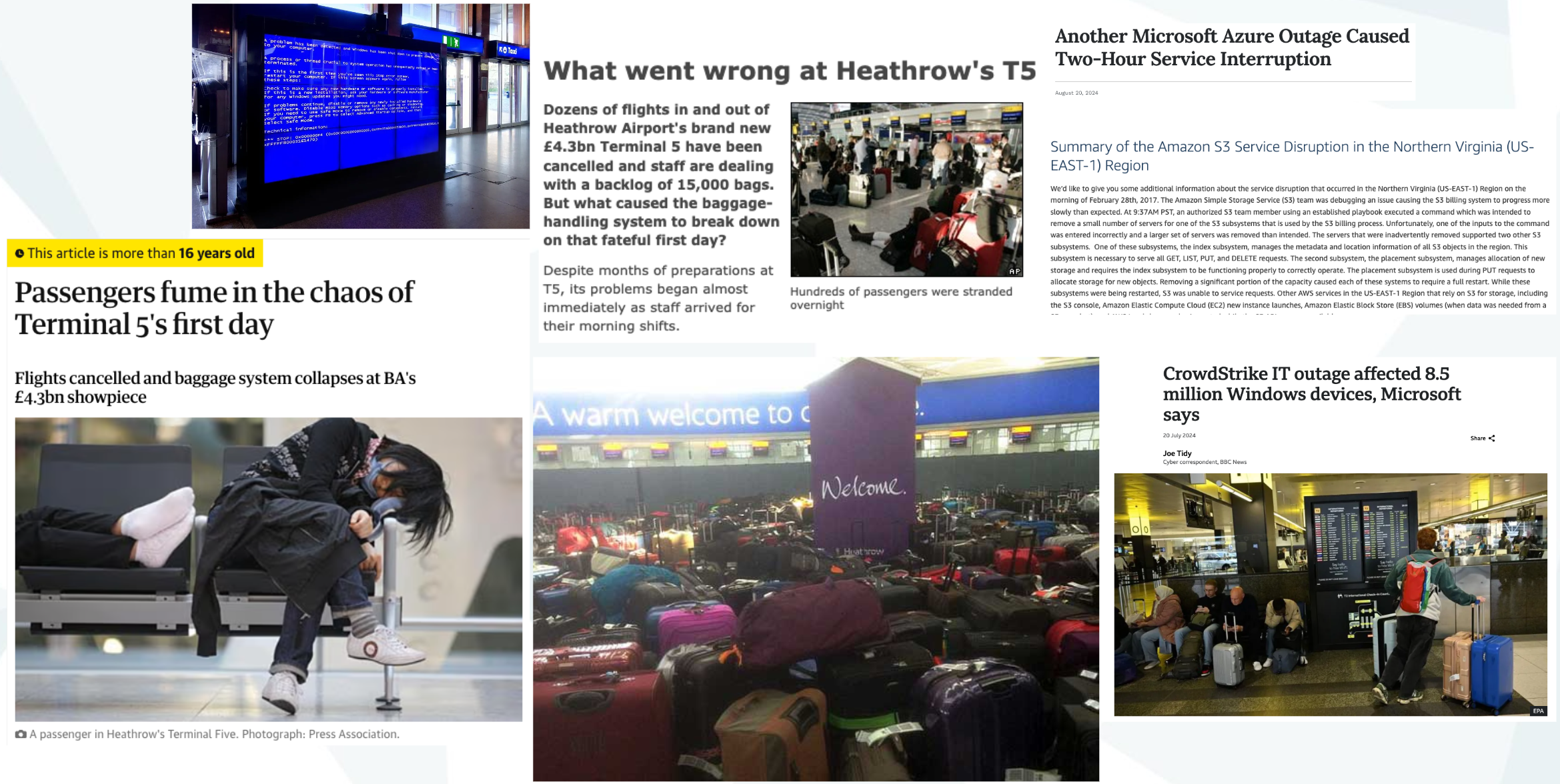

In fact, if you checked LinkedIn around the 20th of July this year (2024), you probably saw everyone talking about the Blue Screen of Death caused by a small, faulty update that CrowdStrike pushed to its customers globally, an update that caused hundreds of millions of dollars in losses!

- Our First Test

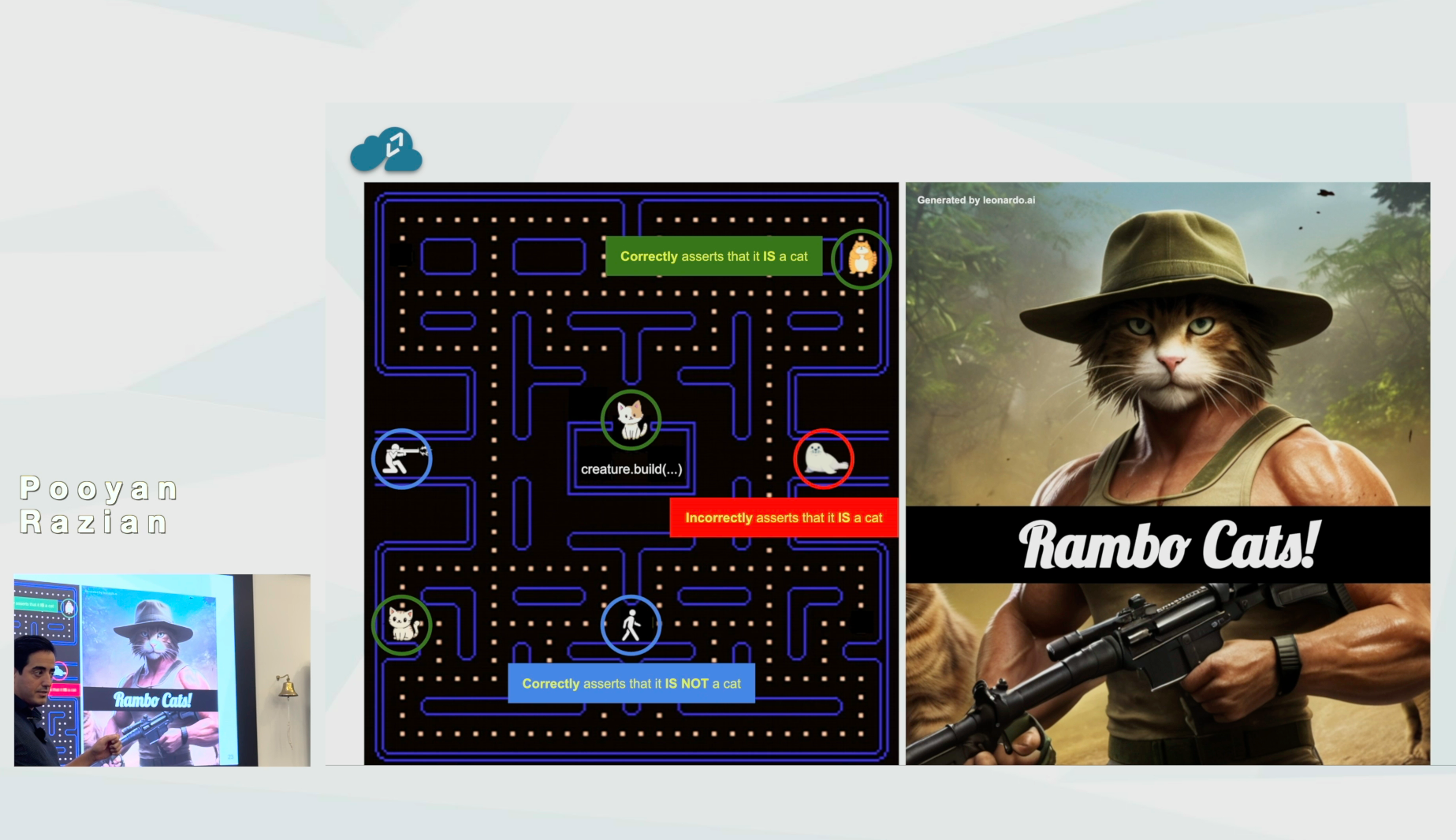

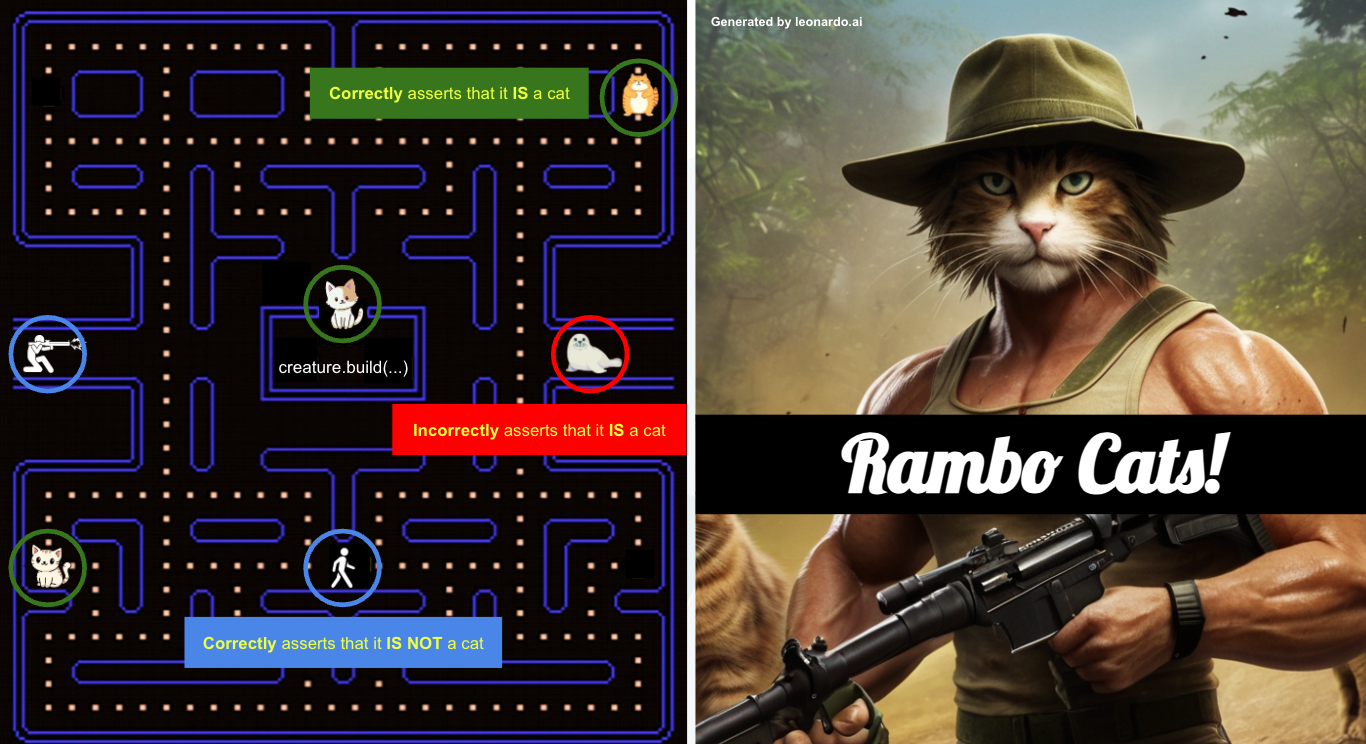

Now that we know why testing is essential, let's see if we can write our first test. Imagine you join a gaming company, and your first task is to create a test for their arcade game named RamboCats!

In this arcade game, you have a builder method (a builder method to build a bodybuilder cat 😅) that creates different creatures based on the parameters you pass to it. Now, you want to ensure that it "builds" cats correctly.

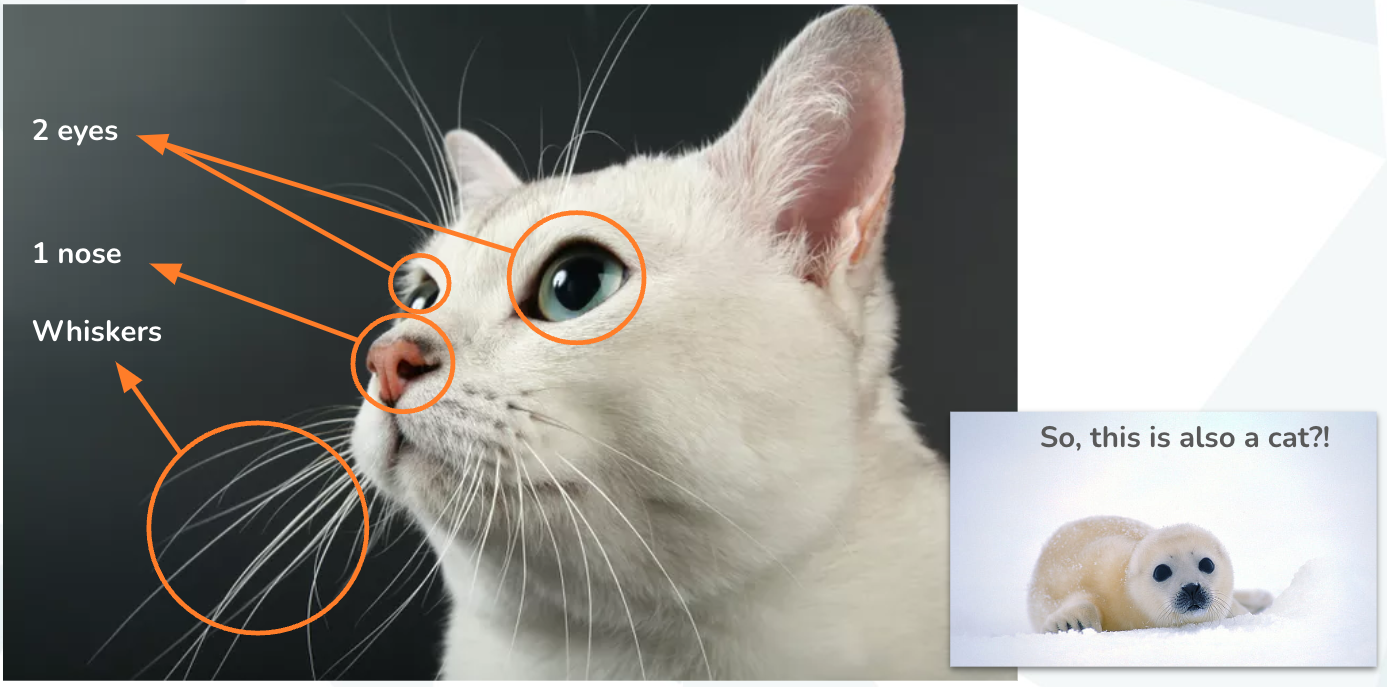

Then you start thinking, "What is a cat?" A cat is an object that has two eyes, one nose, and whiskers. Right?

So, you write your test and say whatever has two eyes, one nose, and whiskers is a cat. Your test correctly asserts that these three cats are cats because they pass all the criteria. These two men are not cats because they don't have whiskers.

Congratulations! You wrote your first test.

Then, you think a bit more and say, "Okay, but if whatever with two eyes, one nose, and whiskers is a cat, then this seal is also a cat!" Right? It has two eyes, one nose, and whiskers.

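That means your test will conclude that this white seal is also a cat, which is incorrect! Your criteria describe things every cat has, but they don't rule out things that aren't cats.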
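
The cat test can be sketched in Go as a small table of examples. `Creature`, `looksLikeCat`, and the example data are hypothetical; the table records what each creature really is, so the seal shows up as the one case where the naive predicate disagrees with reality.

```go
package main

// Creature is a hypothetical stand-in for what the RamboCats builder returns.
type Creature struct {
	Name     string
	Eyes     int
	Noses    int
	Whiskers bool
}

// looksLikeCat encodes the naive definition: two eyes, one nose, whiskers.
func looksLikeCat(c Creature) bool {
	return c.Eyes == 2 && c.Noses == 1 && c.Whiskers
}

// cases pairs each creature with the answer we actually expect.
var cases = []struct {
	c       Creature
	wantCat bool
}{
	{Creature{"tabby", 2, 1, true}, true},
	{Creature{"man", 2, 1, false}, false},
	{Creature{"seal", 2, 1, true}, false}, // a seal is not a cat
}

// mismatches returns the names where the predicate gets it wrong.
func mismatches() []string {
	var out []string
	for _, tc := range cases {
		if looksLikeCat(tc.c) != tc.wantCat {
			out = append(out, tc.c.Name)
		}
	}
	return out
}
```

Here `mismatches()` returns only `"seal"`: the tabby and the man are classified correctly, while the seal passes every criterion without being a cat.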

- Types of Tests

Let's see what types of testing we have for software. There are two general categories:

- Functional testing

- Non-functional testing

Let's begin with 1. functional testing.

Functional testing checks the application's processes against a set of requirements or specifications.

This is what people usually call "test".

The main types of functional testing are unit tests, integration tests, and end-to-end tests. Unit tests are often what people mean by "the test"! Some folks even say, "I have written some unit tests," when they just mean some tests in general. With unit tests, you check individual, small parts of your code to ensure they work the way they are supposed to. End-to-end testing, on the other hand, ensures that the entire system does what it is supposed to do. For example, if I buy something from your store, you get your money, and I get my item. Also, if I look at my order history a few months later, I should see that specific order there.

Integration testing sits somewhere between these two. You don't need to test the whole system, but you also want to test more than a single small part of your code: you want to see if these small parts work together. For example, you might have an API and want to see that when it is called with one payload, it creates an item in the database, and when it is called with another payload, it deletes it.

As you can imagine, end-to-end testing is much more complex than unit testing because you need the whole system up and running. That means you have to spend a lot of resources and put the system into the exact state each test expects. For integration testing, you don't have to prepare the whole system, but you still need to run a few parts of it.

Imagine you want to check if an API responds with a 200 HTTP code when called with one payload, 201 with another HTTP verb, and 401 when the user is unauthorized. On top of that, you also want to see if it creates or deletes an item in the database, or if it blocks the user when she is unauthorized. That means that, depending on what you test, an integration test sometimes treats the system as a black box and checks only its interface, and sometimes checks whether something has changed in the database, message bus, etc.
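
An integration-style check of such an API can be sketched in Go with the standard library's `net/http/httptest`. The `/items` endpoint, the token check, and the in-memory `store` (standing in for a real database) are all hypothetical. The `send` helper checks the interface, meaning the status codes, and a test can then also look inside `store`, which covers the "did something change in the database?" half.

```go
package main

import (
	"net/http"
	"net/http/httptest"
	"sync"
)

// store is a hypothetical in-memory stand-in for the real database.
type store struct {
	mu    sync.Mutex
	items map[string]bool
}

// itemsHandler is a hypothetical API: POST creates an item (201),
// GET reads it (200 or 404), DELETE removes it (204), and any request
// without an Authorization header is rejected with 401.
func itemsHandler(s *store) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		if r.Header.Get("Authorization") == "" {
			w.WriteHeader(http.StatusUnauthorized)
			return
		}
		name := r.URL.Query().Get("name")
		s.mu.Lock()
		defer s.mu.Unlock()
		switch r.Method {
		case http.MethodPost:
			s.items[name] = true
			w.WriteHeader(http.StatusCreated)
		case http.MethodGet:
			if !s.items[name] {
				w.WriteHeader(http.StatusNotFound)
				return
			}
			w.WriteHeader(http.StatusOK)
		case http.MethodDelete:
			delete(s.items, name)
			w.WriteHeader(http.StatusNoContent)
		default:
			w.WriteHeader(http.StatusMethodNotAllowed)
		}
	}
}

// send pushes one request through the handler and returns the status code.
func send(h http.Handler, method, target string, authorized bool) int {
	req := httptest.NewRequest(method, target, nil)
	if authorized {
		req.Header.Set("Authorization", "Bearer test-token")
	}
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return rec.Code
}
```

A test then asserts both halves: for example, that an unauthorized POST returns 401 and leaves `store.items` empty, while an authorized POST returns 201 and the item appears in the store.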

We also have UI testing. If you have a web application, a mobile application, or even a system that sends marketing emails, you want to see not only how they look but also how they function when someone presses a button, etc.

Now, you might say, "Okay, but how many of these tests should I have?!"

This is not set in stone, but if you think about it as a business, you don't want to spend too much time/money/resources on something that will not bring much back to you. Remember, time is money, and complexity needs time. So, as a general practice, most projects end up having a lot of unit tests, a few integration tests, and fewer E2E and UI tests.

Okay, now how do we run these tests?

For unit and integration tests, you need to know what is happening inside your system, so your tooling depends on your programming language. For E2E and UI testing, you don't care about the programming language. All you care about is whether pressing this button or calling this API in a certain way triggers the blah blah workflow. So you use automation tools like Selenium, Playwright, etc.

You can also test your APIs manually, using wget if you are a CLI geek or Postman if you prefer a nice UI. Both can also be scripted, so you can "kind of automate" them.

Good news! Even though we talked about software, you can treat your infrastructure definition as software if you use the new generation of Infrastructure as Code, which is called Infrastructure as Software, like CDK or Pulumi. Then, you can write unit tests, snapshot tests, etc., to ensure your infrastructure is created how you expect it to be.

In the talk, I asked the audience to answer a poll about what types of testing they have in their projects.

The result is as follows:

Now we know that at least two or three people write unit tests without integration tests...

Next time you write a unit test and think we don't need integration tests, remember this:

Other types of functional testing:

- Sanity test (Smoke testing)

Smoke testing is a quick test to see if the application can still be built and run after each small change. We do this to quickly check that these small changes don't break the whole thing.

- Regression testing

Regression testing ensures that when you fix a bug (or make any other change), you don't introduce a new one or break something that used to work.

- Beta/User Acceptance testing (UAT)

We also have UAT. You give your application to a limited number of users or testers who, hopefully, test everything. This is a bit tricky, because a limited number of users or testers might not be able to test every single scenario that everyone using your application will face.

- Data Race detection & concurrency testing

In programming languages that support concurrency, like Go, your code might have data races.

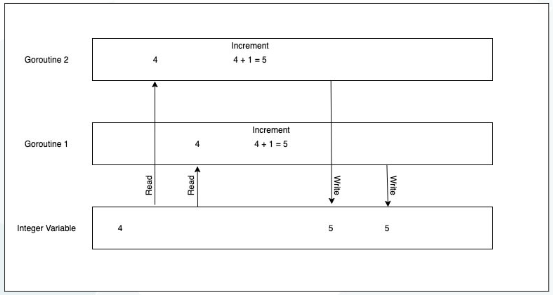

What is a data race?

A data race happens when two or more threads access the same variable at the same time, at least one of them writes to it, and nothing (such as a lock) puts those accesses in order.

For example, imagine having an integer variable shared between two threads.

The first thread reads the value, which is 4, and then adds 1. It becomes 5. Then it writes 5 to it.

The second thread reads the value at the same time, before the first thread has written its result, so it still sees 4. It adds 1 and also writes 5. Now, instead of 6, we have 5.

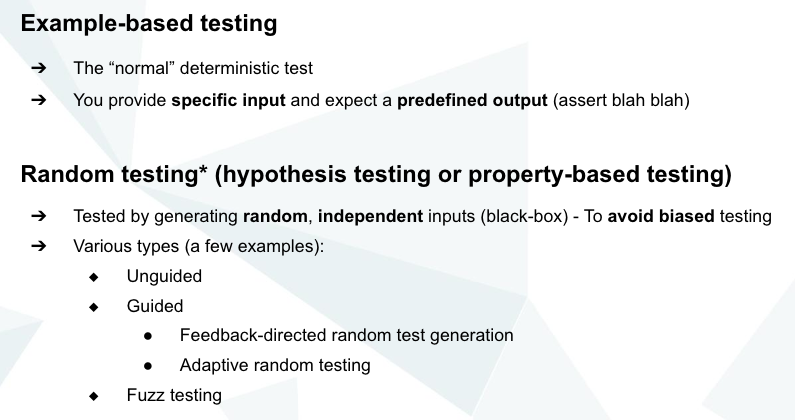
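
Here is a minimal Go sketch of the example above. `incrementUnsafe` lets two goroutines do an unsynchronized read-add-write on a shared counter, so the final value can come out lower than expected; `incrementSafe` serializes the updates with a `sync.Mutex`. Both function names are illustrative. Go's built-in race detector, enabled with the `-race` flag (for example, `go test -race`), reports the unsafe version when a test exercises it.

```go
package main

import "sync"

// incrementUnsafe deliberately contains a data race: two goroutines
// read, add 1, and write the shared counter with no synchronization.
func incrementUnsafe(n int) int {
	counter := 0
	var wg sync.WaitGroup
	for g := 0; g < 2; g++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < n; i++ {
				counter++ // read, add 1, write: not atomic
			}
		}()
	}
	wg.Wait()
	return counter // may be less than 2*n
}

// incrementSafe does the same work, but the mutex makes each
// read-add-write happen as one uninterrupted step.
func incrementSafe(n int) int {
	counter := 0
	var mu sync.Mutex
	var wg sync.WaitGroup
	for g := 0; g < 2; g++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < n; i++ {
				mu.Lock()
				counter++
				mu.Unlock()
			}
		}()
	}
	wg.Wait()
	return counter // always 2*n
}
```

For a simple counter, `sync/atomic` is a lighter alternative to the mutex; the mutex version is shown because it generalizes to any shared state.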

Input strategies

Example-based testing is basically what we usually call "testing"! It is when we provide a pre-defined input and assert that blah blah will happen.

In addition, we have random testing, hypothesis testing, and property-based testing. For example, if my API accepts an email address or a first name, instead of always providing my own name and email address, I use a faker that randomly generates names, emails, etc. I can configure it to provide values from different locales and see if something fails.

I used to think this was redundant and that no one needed it, until it showed me cases I'd never even considered! It can help you find edge cases you might not have thought about before they happen in production. These kinds of tests are usually used to avoid our own biases when choosing test data.

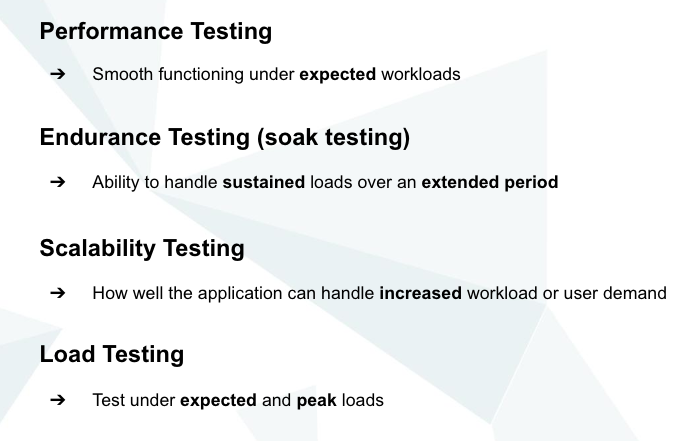
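
Go's standard library ships a small random-testing package, `testing/quick`, used here as a stand-in for the faker described above (it generates random values, not realistic names). The sketch checks one property, "a valid UTF-8 name always yields a valid UTF-8 initial," against a naive byte-slicing helper and a character-aware one; the helper names are hypothetical. Because `testing/quick` builds random strings from code points across the whole Unicode range, the naive version fails almost immediately on names that don't start with an ASCII letter, such as "Émile".

```go
package main

import (
	"testing/quick"
	"unicode/utf8"
)

// initialNaive returns the first byte of a name. It works for "Peter",
// but for "Émile" it returns half of the two-byte "É".
func initialNaive(name string) string {
	if name == "" {
		return ""
	}
	return name[:1]
}

// initial returns the first character (rune) instead of the first byte.
func initial(name string) string {
	_, size := utf8.DecodeRuneInString(name) // size is 0 for ""
	return name[:size]
}

// validInValidOut is the property: a valid UTF-8 name must always
// produce a valid UTF-8 initial.
func validInValidOut(f func(string) string) func(string) bool {
	return func(name string) bool {
		return !utf8.ValidString(name) || utf8.ValidString(f(name))
	}
}

// check runs the property against 1,000 randomly generated strings.
func check(f func(string) string) error {
	return quick.Check(validInValidOut(f), &quick.Config{MaxCount: 1000})
}
```

`check(initial)` returns nil, while `check(initialNaive)` returns an error describing a counterexample, which is exactly the kind of input a hand-picked example list tends to miss.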

Now, 2. Non-Functional testing.

Non-functional tests assess properties of the application that aren't about its functionality itself but contribute to the end-user experience. In other words, your system might work fine, possibly without a single error, while your end users are crying! These tests reduce the chance of that happening.

We have one big category of "reliability under load" that checks if your solution works well under expected, unexpected, or peak load, over a short or an extended period.

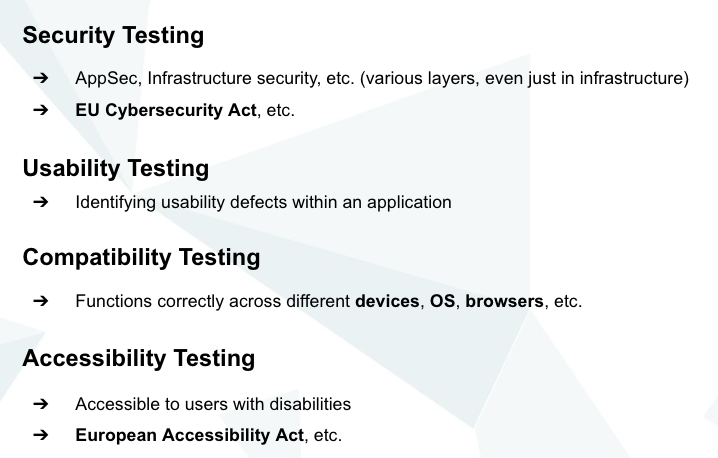
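
A very small load generator can be sketched with Go's standard library: `runLoad` sends a fixed number of GET requests through a bounded number of concurrent workers and records successes, failures, and the slowest response. The function names and numbers are illustrative, and `newStubService` only provides something local to aim at; real load, stress, or soak tests would normally use a dedicated tool against a production-like environment.

```go
package main

import (
	"net/http"
	"net/http/httptest"
	"sync"
	"time"
)

// loadResult summarizes one load run.
type loadResult struct {
	OK         int
	Failed     int
	MaxLatency time.Duration
}

// runLoad sends `total` GET requests to url using `concurrency` workers
// and records successes, failures, and the slowest response time.
func runLoad(url string, concurrency, total int) loadResult {
	client := &http.Client{Timeout: 5 * time.Second}
	jobs := make(chan struct{})
	var (
		mu  sync.Mutex
		res loadResult
		wg  sync.WaitGroup
	)
	for w := 0; w < concurrency; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for range jobs {
				start := time.Now()
				resp, err := client.Get(url)
				elapsed := time.Since(start)
				ok := err == nil && resp.StatusCode == http.StatusOK
				if err == nil {
					resp.Body.Close()
				}
				mu.Lock()
				if ok {
					res.OK++
				} else {
					res.Failed++
				}
				if elapsed > res.MaxLatency {
					res.MaxLatency = elapsed
				}
				mu.Unlock()
			}
		}()
	}
	for i := 0; i < total; i++ {
		jobs <- struct{}{}
	}
	close(jobs)
	wg.Wait()
	return res
}

// newStubService starts a local stand-in for the system under test.
func newStubService() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(http.StatusOK)
	}))
}
```

Raising `concurrency` and `total`, or keeping the run going for longer, moves the same idea from an expected-load check toward a peak or extended-period test.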

We also have security testing, usability testing, compatibility testing, and other related activities.

For example, we use static code analysis to determine whether the application's codebase is vulnerable or whether the infrastructure definition exposes security issues.

- Common Problems

Now that we have discussed the different types of testing, let's examine the common problems. If you have worked with legacy code, you know how difficult it is to test a legacy system, especially one from the dinosaur era of the 1990s, when few projects had any framework to help automate their tests.

So now you might say, "I'm happy we live in the modern era!" Right? But testing modern software architectures is also hard! Why?

Even though we have all these tools, the problem is that with the new types of distributed architectures, instead of just testing one system, we have to check different parts of the whole solution.

For example, what if something goes wrong with the message bus? Or what if the message queue delivers the same message multiple times? Can my software handle it idempotently? What if it delivers the messages in a different order? What if we have one request to block all transactions from a malicious user and another one to give away $1 million? Will it give away the $1 million first and then block the user? Or will it wait to receive the earlier message first?
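
One common way to make a consumer tolerate duplicate deliveries is to remember which messages it has already applied. The sketch below is hypothetical (`Message`, `Consumer`, and an in-memory `seen` set) and assumes every message carries an ID that stays the same across redeliveries; a real service would store processed IDs durably, in the same transaction as the state change. The matching test delivers the same message twice and asserts that the effect happens once.

```go
package main

// Message is a hypothetical bus message; ID is assumed to be unique
// per logical message and identical across redeliveries.
type Message struct {
	ID      string
	Account string
	Amount  int64
}

// Consumer applies each message at most once, so a bus that
// delivers the same message twice does not credit twice.
type Consumer struct {
	seen     map[string]bool // in production: durable, updated atomically with balances
	balances map[string]int64
}

// NewConsumer returns a Consumer with empty state.
func NewConsumer() *Consumer {
	return &Consumer{seen: map[string]bool{}, balances: map[string]int64{}}
}

// Handle applies m and reports whether it did; duplicates are skipped.
func (c *Consumer) Handle(m Message) bool {
	if c.seen[m.ID] {
		return false
	}
	c.seen[m.ID] = true
	c.balances[m.Account] += m.Amount
	return true
}
```

Deduplication covers repeated messages only; ordering problems such as "block the user before paying out" need their own handling, for example ordered delivery per account or explicit sequence numbers.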

We also have challenges with eventual consistency in modern, scalable databases. For example, what if I use DynamoDB and my application writes into it? DynamoDB acknowledges the write once it is durably stored on some of its replicas and says, "Okay, I'm done!" But if you read it back right away with an eventually consistent read (the default), it might not be there yet!
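
When a test has to read back from an eventually consistent store, a single read right after the write is a recipe for flaky failures. A common pattern is to poll until the expected value appears or a timeout expires. The `eventually` helper and the `laggyStore` fake below are hypothetical; the fake simply hides a written value for a few reads to imitate replication lag. With DynamoDB specifically, you can also request a strongly consistent read where the API supports it.

```go
package main

import "time"

// eventually polls cond until it returns true or the timeout expires,
// instead of trusting a single read made right after a write.
func eventually(cond func() bool, timeout, interval time.Duration) bool {
	deadline := time.Now().Add(timeout)
	for {
		if cond() {
			return true
		}
		if time.Now().After(deadline) {
			return false
		}
		time.Sleep(interval)
	}
}

// laggyStore is a hypothetical fake that hides a written value for a
// few reads, imitating replication lag in an eventually consistent store.
type laggyStore struct {
	value     string
	readsLeft int
}

// Read reports the value only once the simulated lag has passed.
func (s *laggyStore) Read() (string, bool) {
	if s.readsLeft > 0 {
		s.readsLeft--
		return "", false
	}
	return s.value, true
}
```

The timeout should be generous enough for normal replication lag but short enough that a genuinely missing write still fails the test quickly.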

Thanks to "Agile" and hundreds of misunderstandings around "Scrum," requirements often change rapidly, which leads to a high volume of test maintenance. When you write a test, you have to maintain it just like your code. So, every time the logic changes, you have to check all your related unit tests, integration tests, etc., and update them. Imagine this happening 10 times a month!

This usually happens because, unfortunately, many non-technical managers see Agile as "fast, fast, fast," which is incorrect! Agile is about being able to adapt to change, not about going fast by constantly making random changes!

It is also difficult to test non-functional requirements.

For example, what is secure? I might say this beautiful app is highly secure because I cannot hack into it, but will a hacker say the same? So, how can I say I've tested that it is secure? Also, I might check an application, see that it works fine with five users, and conclude that it is highly scalable! Five users! On the other hand, does an application from a newly established startup need to scale to Google's level? So, what is scalability? How many users should it be able to serve, and with what response time?

It is also difficult to provide data for all these different scenarios. For each test, I probably need to change the requesting user, the system state, etc.

Test coverage is also a big question. Should I aim for 100% test coverage? How many happy and unhappy paths should I cover? And even then, can I be 100% confident that nothing will go wrong?

Flaky tests are also a big problem. If a test sometimes passes and sometimes fails, you cannot trust it. This often happens when your tests depend on a third-party system or its APIs.
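
A common way to keep such tests deterministic is to put the third-party call behind an interface and give unit tests a fake with fixed answers. The names below (`RateProvider`, `Convert`, `fakeRates`) are hypothetical; amounts are integer cents and rates are in basis points to avoid floating-point rounding. This doesn't replace integration tests against the real dependency (or a container that emulates it); it just stops a slow or unavailable external API from failing unrelated tests.

```go
package main

import "errors"

// RateProvider hides a third-party API, here currency rates in basis
// points (1/10000). The interface is hypothetical.
type RateProvider interface {
	RateBP(from, to string) (int64, error)
}

// Convert depends on the interface, not on a concrete HTTP client,
// so tests can swap in a deterministic fake.
func Convert(p RateProvider, cents int64, from, to string) (int64, error) {
	bp, err := p.RateBP(from, to)
	if err != nil {
		return 0, err
	}
	return cents * bp / 10000, nil
}

// fakeRates is a test double: fixed answers, no network, no flakiness.
type fakeRates struct {
	bp map[string]int64
}

func (f fakeRates) RateBP(from, to string) (int64, error) {
	r, ok := f.bp[from+"->"+to]
	if !ok {
		return 0, errors.New("unknown currency pair")
	}
	return r, nil
}
```

In production, a real implementation of `RateProvider` would call the external API; the business logic in `Convert` doesn't change.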

Inconsistency between environments is also a big problem. For example, my tests pass in Dev and Staging, but the whole solution fails in production! Why? I created a new IAM role in my Dev and Staging environments, but I could not do it in production as I didn't have access and we didn't have Infrastructure as Code.

Tests can also potentially affect each other. This happens when you use a shared environment and multiple tests run in parallel.

Over-reliance on unit tests and avoiding other types of tests is also a problem, especially when non-technical managers push teams to skip them with excuses like "we don't have time or resources."

Also, it is quite common for folks to skip a test when it fails in the shared pipeline.

What if we forget to add it back?

So, shall we avoid testing? Then, we are back to the first step! 😏

- Can Containers Help

Let's see if containers can help! Imagine you have joined a company and you own a service called "my service" along with its database. You also have a few downstream dependencies: for example, another service, a message bus, and a few AWS services, like S3 for object storage.

You need all these resources in your local environment and for your integration tests.

What are the challenges?

Before running tests, you have to spin up an environment and make sure it is up and running, with everything pre-configured in the desired state. Which users should exist in the database? Which orders should exist in the database? And so on.

We should also avoid non-deterministic tests, data corruption, and configuration drift.

One common way is to use a shared environment (UAT, test, etc.), but the problem with non-deterministic tests still exists.

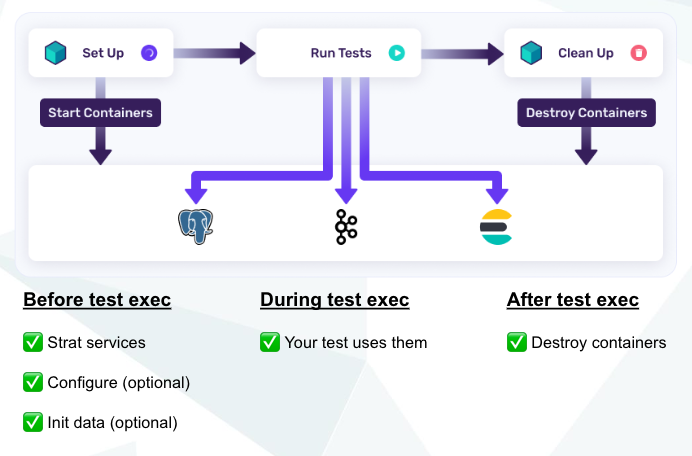

A better alternative is to run your tests against separate Docker containers so that they are reliable and repeatable. Every time you run your tests, the "setup" phase starts all the downstream services for you, configures them, initializes data, and makes everything ready for your tests. Then the tests run, and everything is cleaned up. In other words, you create an isolated environment on demand, so you get a consistent experience everywhere: locally, in CI, or even on someone else's machine! You probably also want to run these tests in parallel, so your tooling should be able to assign different random ports to these containers and wait for them to be ready. Note that if you use shared containers, you will still have the same problems as with any other shared environment.

These tools should also clean up automatically; otherwise, you will end up with many containers still running on your machine after each test run, wasting your resources!

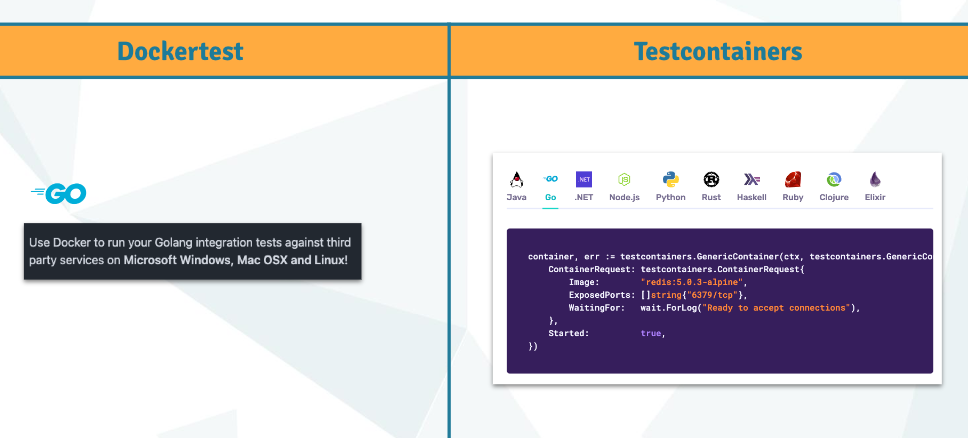
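
The two chores mentioned above, random ports and waiting for readiness, can be sketched with Go's standard library. Here `startStub` listens on port 0 so the operating system picks a free port, standing in for "start a container and map a random host port," and `waitForTCP` polls until that port accepts connections. Both names are hypothetical; Dockertest (`pool.Retry`) and Testcontainers (wait strategies) provide their own versions of this readiness check. In a real test, you would register the cleanup function with `t.Cleanup` so the resource is removed even when the test fails.

```go
package main

import (
	"fmt"
	"net"
	"time"
)

// startStub stands in for "start a container with a random host port":
// it listens on port 0, so the OS picks a free port, and returns the
// address plus a cleanup function.
func startStub() (string, func(), error) {
	l, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", nil, err
	}
	return l.Addr().String(), func() { l.Close() }, nil
}

// waitForTCP polls addr until it accepts a TCP connection or the
// timeout expires, like the readiness checks container tools provide.
func waitForTCP(addr string, timeout time.Duration) error {
	deadline := time.Now().Add(timeout)
	for {
		conn, err := net.DialTimeout("tcp", addr, 100*time.Millisecond)
		if err == nil {
			return conn.Close()
		}
		if time.Now().After(deadline) {
			return fmt.Errorf("%s not ready after %s: %w", addr, timeout, err)
		}
		time.Sleep(50 * time.Millisecond)
	}
}
```

Because every test gets its own port, several tests can start their own dependencies in parallel without colliding.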

As far as I'm aware, in 2024, we have two options: Dockertest and Testcontainers. Dockertest is Golang-specific, while Testcontainers supports many different programming languages.

Let's talk again about the hypothetical company "AmazingLargeCorporate.com". Like many other companies, they started with a monolith written in Java. Why Java? Because it has a fantastic ecosystem and is an OOP language. Then, with the hype around microservices, they eventually changed their architecture, but still used Java. Why? Because they were a Java company. But builds were too slow, and now they had both the complexity inside each service and the complexity of distribution! So they said, "Okay, let's try Golang!" Peter put on his Go hat and built a few services.

Then, he wanted to add integration tests. He used Dockertest to start the services on random ports in the setup stage, and then ran the tests. He wrote his tests in a table-driven way, so he could run the same test under different conditions.
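
A table-driven test in Go keeps the cases in a slice of structs and runs each one as a named subtest with `t.Run`. In Peter's suite, each row would hit the services Dockertest had started; in this sketch, a hypothetical pure function, `discountedPrice`, stands in so it runs anywhere.

```go
package main

import "testing"

// discountedPrice is a hypothetical function under test: it applies a
// percentage discount in cents, but popular items are never discounted.
func discountedPrice(price, percent int64, popular bool) int64 {
	if popular || percent <= 0 {
		return price
	}
	if percent > 100 {
		percent = 100
	}
	return price - price*percent/100
}

// TestDiscountedPrice runs the same check under different conditions;
// t.Run gives every row its own name in the test output.
func TestDiscountedPrice(t *testing.T) {
	tests := []struct {
		name    string
		price   int64
		percent int64
		popular bool
		want    int64
	}{
		{"ten percent off", 1000, 10, false, 900},
		{"popular item untouched", 1000, 10, true, 1000},
		{"no discount", 1000, 0, false, 1000},
		{"capped at 100 percent", 1000, 150, false, 0},
	}
	for _, tt := range tests {
		t.Run(tt.name, func(t *testing.T) {
			if got := discountedPrice(tt.price, tt.percent, tt.popular); got != tt.want {
				t.Errorf("got %d, want %d", got, tt.want)
			}
		})
	}
}
```

Adding a new scenario is one more row in the table, which keeps the maintenance cost of a growing test suite low.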

He ended up with a large CI/CD pipeline in which he tested everything.

If you are interested in reading more about CI/CD Pipeline Best Practices, check out this article.

Thank you!

Thank you for reading all the way through! If you liked the talk or this article, please share it with your friends and colleagues. If you have any questions or feedback, please feel free to connect and reach out to me on LinkedIn.

Who am I?

I'm a Solution Architect consultant at Kodlot.

This is my face when I debug my code!

That's my laser, but sometimes it doesn't work...

I'm a curious person who happens to like hiking, especially hiking above the clouds! That's probably why I chose to work with the Cloud!

I love Linux and support the GNU and open-source movements. I've been a programmer for many years, working with different programming languages, including, but not limited to, Python, TypeScript, and Golang. I've been working with AWS Cloud for 5-6 years now.

Before that, a long, long, long time ago, I used to be a Linux admin. I'm one of those lucky guys who could go into a data center and see what's happening there, which is something I'm very proud of.

These days, I'm more of a Hands-On Solutions Architect. I join different projects to see what the problem is (hopefully 😀), come up with a few possible solutions, and help the client by building PoCs, evaluating different options, and then helping them implement the solution. I draw architectural diagrams, but that's not the only thing I do.

If you are interested in learning more about me, please check out my portfolio at pooyan.info.

The event

Recording (YouTube)

Presentation file (PDF)

If the file is not loaded here, please check out this post on my LinkedIn.